Data Protection Best Practices

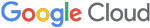

Corporate data theft is on the rise as attackers look to monetize access through extortion and other means. In 2022, 40% of the intrusions Mandiant experts worked on resulted in data loss, an 11% jump from the previous year. Just this year, a major vulnerability in file transfer software has resulted in large-scale data loss for organizations across the globe (read our research on the MOVEit zero-day vulnerability).

To effectively protect sensitive corporate data, organizations should establish data protection programs that consist of dedicated funding, security tooling, and defined teams. A comprehensive data protection program can limit the impact of an attack and reduce the likelihood of data exfiltration in the event of a successful hack.

Table 1 shows examples of common types of data loss events that organizations face and potential defensive controls that can be implemented to safeguard against them.

| Types of Data Loss | Defensive Controls |
| --- | --- |
| Publicly exposed cloud storage bucket | |
| Data exfiltration from corporate network | |
| Attacker access to a cloud-based mailbox / Inbox syncing | |
| Loss or theft of a corporate device | |
| Theft of data from trusted insider | |

Table 2 includes a non-exhaustive, high-level list of example data protection alerts and corresponding detection use cases organizations often deploy to identify anomalous data theft activity across different platforms.

| Activity | Example Detection Use Cases |
| --- | --- |
| Bulk Downloads in Azure | |
| Large Outbound Traffic | |
| GitHub Uploads | |
| Identification of File Transfer Utilities | |
| Suspicious Database Queries | |
| AWS Unauthorized Data Access | |
| Google Workspace Data Exposure | |
| Google Cloud Platform Data Loss | |
| M365 Data Theft | |
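As one illustration of the "Large Outbound Traffic" use case in Table 2, the sketch below flags hosts whose outbound byte count for a period is far above their own historical baseline. The input shape (per-host outbound byte counts, for example aggregated from firewall or proxy logs), the 3-standard-deviation threshold, and the 500 MB floor are assumptions for illustration, not a Mandiant detection rule.

```python
from statistics import mean, stdev

def flag_large_outbound(history, current, z_threshold=3.0, min_bytes=500_000_000):
    """Return hosts whose outbound volume this period is anomalously high.

    history: dict mapping host -> list of past per-period outbound byte counts
    current: dict mapping host -> outbound bytes in the period being checked
    """
    flagged = []
    for host, sent in current.items():
        if sent < min_bytes:
            continue  # ignore small transfers to reduce alert noise
        past = history.get(host, [])
        if len(past) < 2:
            flagged.append(host)  # no usable baseline yet: surface for review
            continue
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            anomalous = sent > mu  # flat baseline: any increase stands out
        else:
            anomalous = (sent - mu) / sigma > z_threshold
        if anomalous:
            flagged.append(host)
    return sorted(flagged)

history = {
    "web01": [1_000_000_000, 1_100_000_000, 900_000_000],
    "hr-laptop": [200_000_000, 220_000_000, 180_000_000],
}
current = {"web01": 1_050_000_000, "hr-laptop": 6_000_000_000}
print(flag_large_outbound(history, current))
```

Comparing each host against its own history, rather than against one global threshold, avoids constantly alerting on servers that legitimately move large volumes of data.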

This blog post outlines common strategies organizations can take to protect against the theft or loss of sensitive internal data. Overall, an effective data protection program can be built in the following phases:

- Develop Data Protection Governance

- Identify & Classify Critical Data

- Protect Critical Data

- Conduct Monitoring and Operationalize Alerting

Develop Data Protection Governance

A Data Classification and Protection program helps ensure that appropriate protection measures are applied to systems and applications handling key data. It also allows organizations to better gauge which systems would be of most interest to an attacker. Key policies and procedures should be developed to govern data protection across an organization. These should include the following:

- Data classification policy

- Data access policy

- Data security policy

- Data breach response procedure

- Data retention and disposal policy

Development of the program may require an organization to:

- Create a process to identify all sensitive or proprietary data (see Identify & Classify Critical Data).

- Define and assign data ownership roles, responsibilities, accountabilities, and authorities.

- Establish a risk-based method for the classification of data.

- Provide a method to allow data owners to classify their data with classification labels.

- Develop guidance to dictate how to best safeguard the data of each level of classification (see Protect Critical Data).

- Integrate with the change management processes and the system and data lifecycles of the departments that collect or generate sensitive data.
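To make the risk-based classification and per-level safeguarding guidance more concrete, the sketch below defines an ordered set of classification labels, assigns a data set the most restrictive label implied by the data types it contains, and looks up the handling requirements for that label. The label names, data-type mapping, and safeguards are hypothetical placeholders that an organization's own data classification policy would define.

```python
from enum import IntEnum

class Label(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

# Hypothetical mapping from detected data types to the minimum label each requires.
DATA_TYPE_LABELS = {
    "marketing_copy": Label.PUBLIC,
    "employee_directory": Label.INTERNAL,
    "customer_email": Label.CONFIDENTIAL,
    "source_code": Label.CONFIDENTIAL,
    "payment_card": Label.RESTRICTED,
}

# Hypothetical handling guidance for each level.
SAFEGUARDS = {
    Label.PUBLIC: [],
    Label.INTERNAL: ["authenticated access"],
    Label.CONFIDENTIAL: ["authenticated access", "encryption at rest", "DLP monitoring"],
    Label.RESTRICTED: ["authenticated access", "encryption at rest", "DLP monitoring",
                       "MFA", "quarterly access review"],
}

def classify(data_types):
    """Return the most restrictive label implied by the data types present.

    Unknown data types default to CONFIDENTIAL so unreviewed data is not
    under-protected; an empty set of data types defaults to INTERNAL.
    """
    labels = [DATA_TYPE_LABELS.get(t, Label.CONFIDENTIAL) for t in data_types]
    return max(labels, default=Label.INTERNAL)

label = classify({"employee_directory", "payment_card"})
print(label.name, SAFEGUARDS[label])
```

Taking the maximum label reflects the usual rule that a data set is only as safe as its most sensitive element.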

Organizations should design their Data Protection Program using a risk-based approach and should perform risk assessments to determine threats to data, potential vulnerabilities, risk tolerances, and the likelihood of attacks specific to the organization.
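One common, simple way to operationalize such a risk assessment is to score each threat as likelihood multiplied by impact and compare the result against the organization's risk tolerance. The sketch below assumes 1-to-5 ordinal scales and a tolerance of 8; the scales, tolerance, and example scores are illustrative assumptions, and real programs typically follow a documented risk methodology.

```python
from dataclasses import dataclass

@dataclass
class Threat:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def above_tolerance(threats, tolerance=8):
    """Return threats whose risk score exceeds the tolerance, highest score first."""
    return sorted((t for t in threats if t.score > tolerance),
                  key=lambda t: t.score, reverse=True)

# Hypothetical scores for three of the data loss types from Table 1.
threats = [
    Threat("Publicly exposed cloud storage bucket", likelihood=3, impact=5),
    Threat("Loss or theft of a corporate device", likelihood=4, impact=2),
    Threat("Theft of data from trusted insider", likelihood=2, impact=5),
]
for t in above_tolerance(threats):
    print(f"{t.score:>2}  {t.name}")
```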

Identify and Classify Critical Data

Identify Data

In order to properly identify critical data, a formal data discovery project should be conducted:

- Perform discovery scans to identify critical data and the systems in which critical data sets reside.

- Conduct a comprehensive inventory of all data that exists in the organization (both structured and unstructured).

- Determine where that data is stored, who has access to that data, and how that data is used.
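A basic discovery scan can be approximated with pattern matching. The sketch below walks a directory tree, looks for email addresses and payment-card-like numbers (validated with the Luhn checksum to cut false positives), and reports which files contain them. The patterns and file suffixes are deliberately simple assumptions; commercial and cloud-native discovery tools use far richer detectors and also cover structured stores such as databases.

```python
import re
from pathlib import Path

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(d) for d in number if d.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def scan_text(text: str) -> set:
    """Return the sensitive data types found in a block of text."""
    found = set()
    if EMAIL_RE.search(text):
        found.add("email")
    if any(luhn_valid(m.group()) for m in CARD_RE.finditer(text)):
        found.add("payment_card")
    return found

def scan_tree(root: str, suffixes=(".txt", ".csv", ".log")) -> dict:
    """Map each matching file under `root` to the data types it contains."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in suffixes:
            hits = scan_text(path.read_text(errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

The output of `scan_tree` can seed the inventory of where sensitive data lives; the "who has access" and "how it is used" questions still require access and usage data from the systems themselves.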

Understand and Prioritize Key Data

A Crown Jewels Assessment can help organizations better prioritize which data requires the most attention and safeguards. As a best practice, a process should be in place to perform a crown jewels assessment for each new data set that enters the environment.

Focus should be given to analyzing data flows and how different types of data move within the organization, with the goal of understanding how data is obtained, processed, used, transferred, shared, and stored. Once this analysis is complete, the criticality of each data set can be determined.
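Data-flow analysis can be modeled as a directed graph in which nodes are systems and edges are observed transfers. The sketch below uses a breadth-first search to list every system a data set can reach from where it originates, which shows how far its protections need to extend. The system names and flows are hypothetical examples.

```python
from collections import deque

def reachable_systems(flows, source):
    """Return every system reachable from `source` via recorded data flows.

    flows: iterable of (from_system, to_system) pairs describing observed transfers
    """
    graph = {}
    for src, dst in flows:
        graph.setdefault(src, set()).add(dst)
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(source)
    return sorted(seen)

# Hypothetical flows for a customer-records data set.
flows = [
    ("crm_db", "reporting_warehouse"),
    ("reporting_warehouse", "bi_dashboard"),
    ("crm_db", "nightly_backup"),
    ("bi_dashboard", "email_export"),
    ("hr_system", "payroll_vendor"),
]
print(reachable_systems(flows, "crm_db"))
```

Any system the search reaches, such as the hypothetical `email_export` above, effectively holds a copy of the data and would warrant controls matching its criticality.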

Protect Critical Data

Data Loss Prevention (DLP) solutions should be integrated at gateways and endpoints to allow security teams to efficiently monitor the movement of critical or sensitive information, both internally and externally.

Access Controls

- Leveraging Role-Based Access Control (RBAC) to assign access privileges based on job roles and responsibilities.

- Using an identity and access management tool that provides privileged access management (PAM) to increase control and visibility over accounts with access to sensitive information.

- Enforcing the use of strong passwords, multi-factor authentication (MFA), or biometric authentication. Once these access controls are in place, organizations should begin evaluating a Zero Trust architecture to add a further layer of authentication security.

- Tracking and recording access attempts, including successful attempts, failed attempts, risky sign-ins, impossible travel, etc.

- Conducting regular access reviews to ensure that access to sensitive data remains appropriate. At a minimum, reviews should be conducted yearly for non-privileged users and quarterly for privileged users or other users with access to sensitive data.
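The minimum review cadence (yearly for non-privileged users, quarterly for privileged users or users with access to sensitive data) can be checked automatically against an access inventory. In the sketch below, the record fields and the 90- and 365-day approximations of "quarterly" and "yearly" are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed approximations of the minimum review cadences.
PRIVILEGED_INTERVAL = timedelta(days=90)
STANDARD_INTERVAL = timedelta(days=365)

@dataclass
class Grant:
    user: str
    resource: str
    privileged: bool       # privileged role or access to sensitive data
    last_reviewed: date

def overdue_reviews(grants, today):
    """Return grants whose most recent access review is older than allowed."""
    overdue = []
    for g in grants:
        interval = PRIVILEGED_INTERVAL if g.privileged else STANDARD_INTERVAL
        if today - g.last_reviewed > interval:
            overdue.append(g)
    return overdue

grants = [
    Grant("alice", "finance-share", privileged=True, last_reviewed=date(2023, 1, 10)),
    Grant("bob", "wiki", privileged=False, last_reviewed=date(2023, 1, 10)),
]
for g in overdue_reviews(grants, today=date(2023, 6, 1)):
    print(f"Review overdue: {g.user} -> {g.resource}")
```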

Tools and Capabilities

Tools and capabilities should be deployed to detect potential data loss events. Technical defense mechanisms that can aid in the protection of data include:

- Third-party DLP tools that can detect and prevent data exfiltration by monitoring network traffic, email attachments, endpoint activities, and removable media.

- Native DLP capabilities in Microsoft 365, SharePoint, and other cloud and as-a-service applications. Read on for Google Cloud Platform (GCP)-specific tools and services.

- Network segmentation to create zones or segments that isolate and limit access to sensitive data.

- Disabling USB removable media using Group Policy Objects (GPOs).

- Encryption for data both at rest (e.g., disk encryption through BitLocker, FileVault) and in transit (e.g., SSL/TLS).

- Leveraging a patch management solution to keep hardware and software up to date, reducing the attack surface that can lead to data exfiltration.

- Firewalls configured to deny specific types of traffic, used in conjunction with a dedicated DLP solution.

- A Database Activity Monitoring (DAM) solution to detect potential data theft and other data misuse.
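As a simplified illustration of one DAM-style heuristic, the sketch below reviews query-log entries and flags queries that read an unusually large number of rows from tables tagged as sensitive, or that come from an account not normally associated with that table. The event format, table tags, expected accounts, and row threshold are hypothetical; production DAM solutions typically parse native database audit logs or protocol traffic and apply far richer analytics.

```python
from dataclasses import dataclass

@dataclass
class QueryEvent:
    account: str
    table: str
    rows_returned: int

SENSITIVE_TABLES = {"customers", "payment_cards"}  # hypothetical tags
EXPECTED_ACCOUNTS = {                              # hypothetical normal consumers
    "customers": {"crm_app"},
    "payment_cards": {"billing_app"},
}

def suspicious_queries(events, row_threshold=10_000):
    """Yield (event, reason) pairs for anomalous queries against sensitive tables.

    Bulk reads are reported first; an event gets at most one reason.
    """
    for e in events:
        if e.table not in SENSITIVE_TABLES:
            continue
        if e.rows_returned > row_threshold:
            yield e, "bulk read"
        elif e.account not in EXPECTED_ACCOUNTS.get(e.table, set()):
            yield e, "unexpected account"
```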

Microsoft Purview

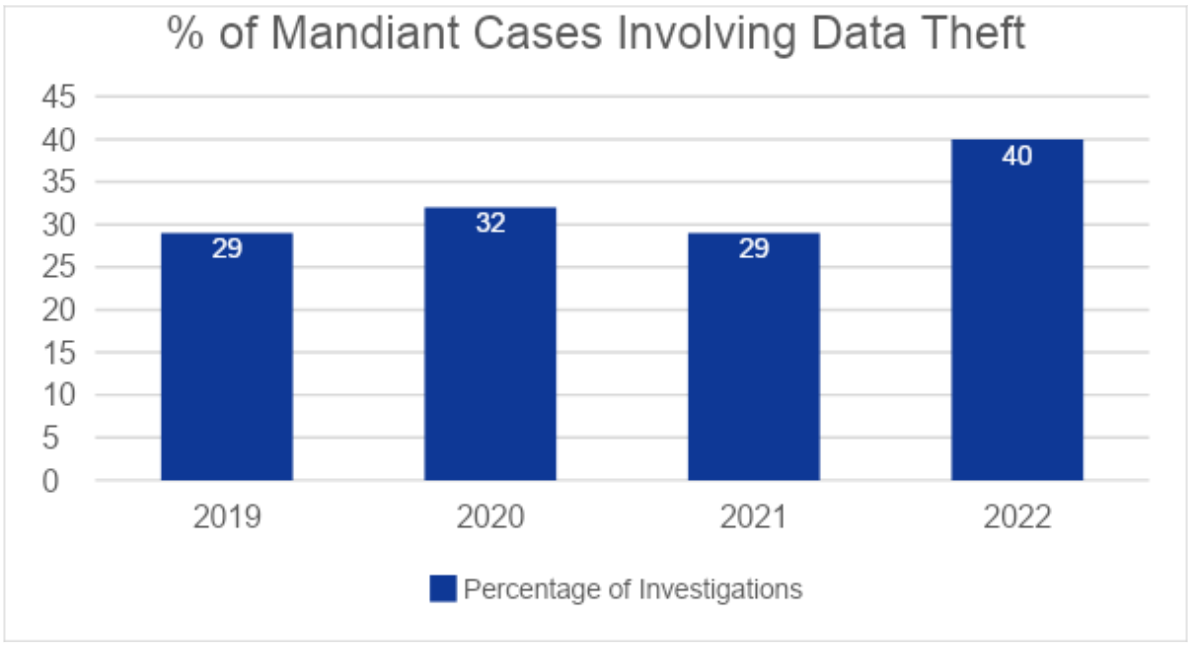

For an organization that primarily uses the Microsoft ecosystem, Microsoft Purview can be deployed as a DLP solution that combines data governance, risk, and compliance tools into a single unified platform. For protecting an organization’s data, Purview can be used to automate data discovery, cataloging, classification, and governance. For data loss prevention specifically, Purview offers “Adaptive Protection,” which uses machine learning to provide context-aware detection and automated mitigation of DLP events.

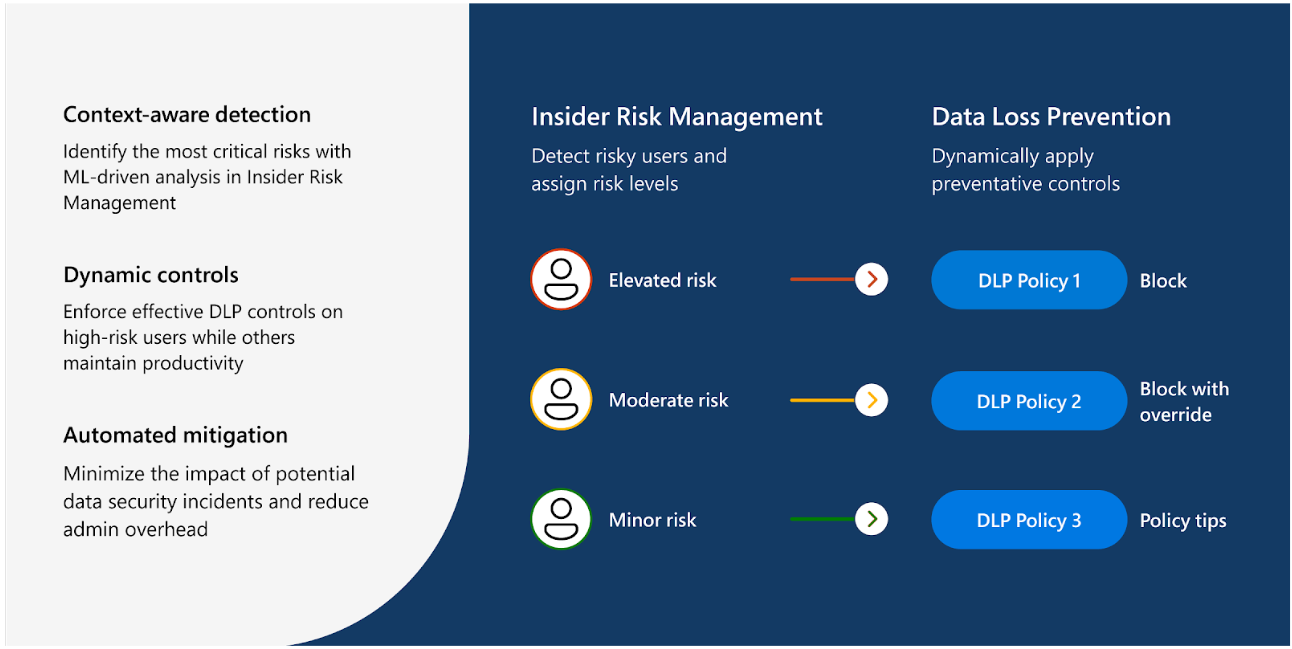

Amazon Macie

For an organization that primarily uses the Amazon Web Services (AWS) ecosystem, Amazon Macie can be leveraged to automate the discovery of sensitive data, access provisioning, the discovery of security risks, and the deployment of protection mechanisms against those risks in the AWS environment.

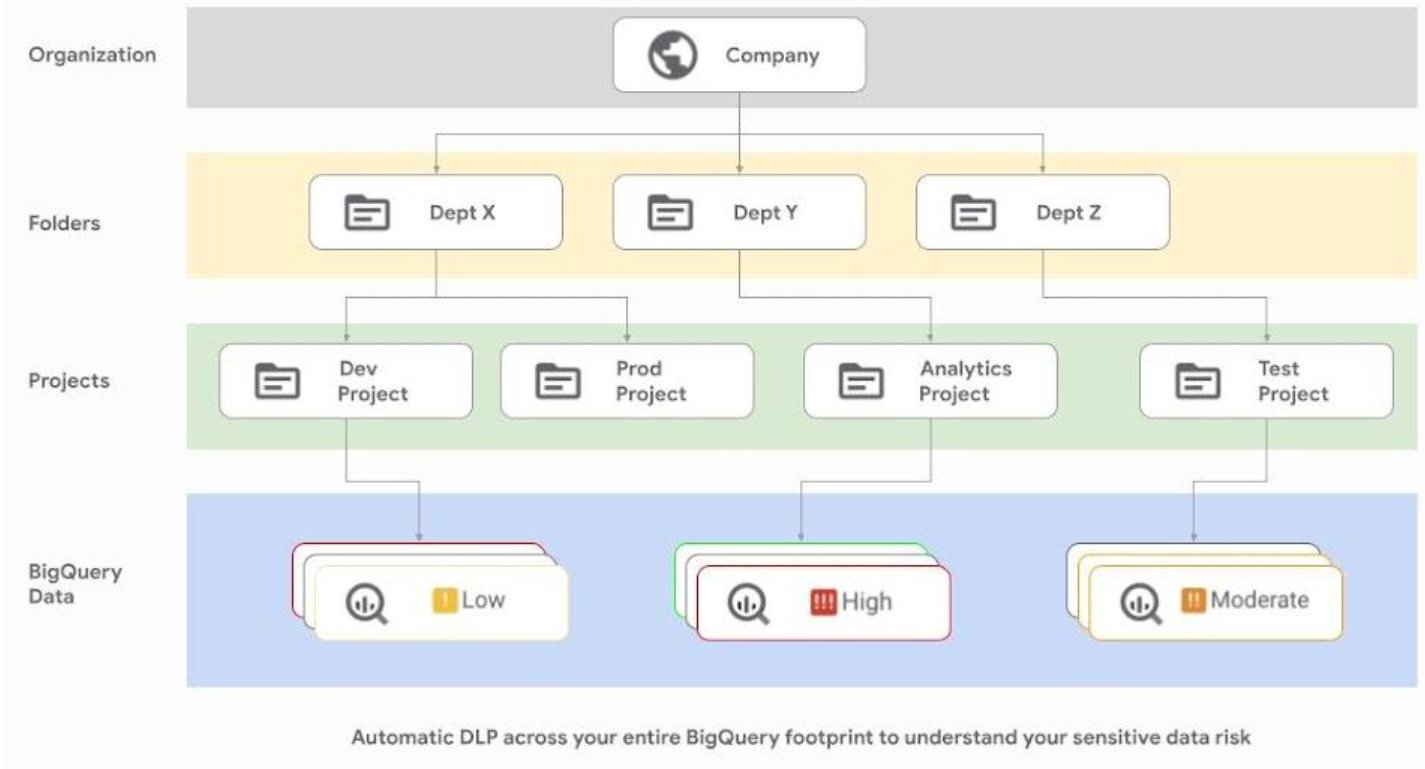

GCP Cloud DLP

Organizations that use GCP can leverage Cloud DLP to perform sensitive data inspection, classification, and de-identification. Cloud DLP also automatically profiles BigQuery tables and columns across the organization to discover sensitive data. Key features include:

- Native support for scanning and classifying sensitive data in Cloud Storage, BigQuery, and Datastore, plus a streaming content API that extends support to additional data sources, custom workloads, and applications.

- Tools to classify, mask, tokenize, and transform sensitive elements, helping organizations better manage the data they collect, store, or use for business or analytics.
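Cloud DLP performs masking and tokenization through its own de-identification API. To illustrate what these two transformations do, independent of any product, the sketch below masks all but the last four digits of a value and replaces a value with a deterministic keyed token (HMAC-SHA256), so the same input always yields the same token and joins across data sets still work without exposing the original. This is a standard-library sketch of the concepts, not the Cloud DLP API; the `TOK_` prefix and token length are arbitrary choices, and in practice the key would be held in a secrets manager.

```python
import hashlib
import hmac

def mask(value: str, visible: int = 4, char: str = "*") -> str:
    """Replace every digit except the last `visible` digits with `char`."""
    total = sum(c.isdigit() for c in value)
    seen = 0
    out = []
    for c in value:
        if c.isdigit():
            seen += 1
            out.append(c if seen > total - visible else char)
        else:
            out.append(c)  # keep separators so the format stays recognizable
    return "".join(out)

def tokenize(value: str, key: bytes, length: int = 16) -> str:
    """Return a deterministic, keyed surrogate for `value`.

    HMAC is one-way: recovering the original requires a separately stored lookup table.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "TOK_" + digest[:length]

print(mask("4111-1111-1111-1234"))
print(tokenize("jane.doe@example.com", key=b"example-key-only"))
```

Masking suits display contexts where only a partial value is needed, while deterministic tokens suit analytics where records must still be matched across tables.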

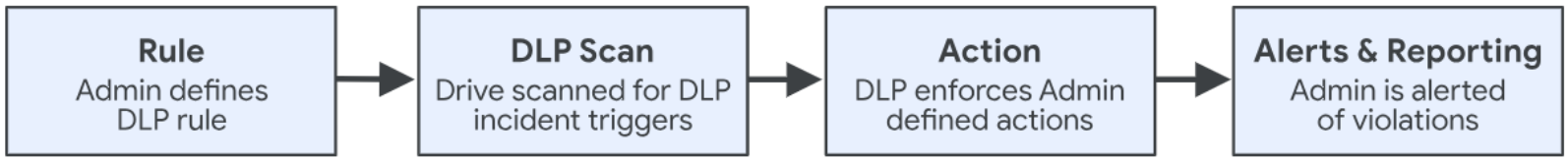

DLP rules can be created to control the content that is permitted to leave an organization’s network. These rules can be established to audit the usage of sensitive content, warn users who are about to share information outside of the organization, prevent sharing of sensitive data (e.g., content that contains PII or is labeled as sensitive), and alert administrators about potential policy violations.
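The rule outcomes described above (audit, warn, prevent, alert) can be expressed as a small policy function. The sketch below is a hypothetical rule set, not the configuration syntax of any specific DLP product: it audits every share leaving the organization, warns on internal-only data, and blocks and alerts on PII or data labeled sensitive. The organization domain and label names are assumptions.

```python
from dataclasses import dataclass, field

ORG_DOMAIN = "example.com"  # hypothetical organization domain

@dataclass
class ShareRequest:
    sender: str
    recipients: list
    labels: set = field(default_factory=set)  # classification labels on the content
    contains_pii: bool = False                # result of a content scan

def evaluate(req):
    """Return the set of DLP actions triggered by a share request."""
    external = [r for r in req.recipients if not r.lower().endswith("@" + ORG_DOMAIN)]
    if not external:
        return {"allow"}  # internal-only shares are outside this rule's scope
    actions = {"audit"}   # log every share that leaves the organization
    if req.contains_pii or "sensitive" in req.labels:
        actions |= {"block", "alert_admin"}
    elif "internal" in req.labels:
        actions.add("warn_user")
    else:
        actions.add("allow")
    return actions
```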

Conduct Monitoring and Operationalize Alerting

Detection Controls

Data loss detection controls are designed to identify and alert organizations about potential incidents or situations where sensitive data may be at risk of loss or unauthorized access. Detection controls should be deployed across the organization’s network, endpoint, cloud, and application assets. User activity should be monitored for suspicious or anomalous behavior, such as accessing sensitive files that the user does not usually access. Logs and alerts from multiple sources should be forwarded to a central repository (e.g., a SIEM) to provide timely alerts on potential threats.
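The example of a user accessing sensitive files they do not usually access can be sketched as a per-user baseline comparison. The sketch below builds each user's baseline of previously accessed folders from historical access logs and flags new accesses to sensitive folders outside that baseline; in practice such events would be forwarded to the SIEM as alerts. The event format and the sensitive-folder prefixes are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath

SENSITIVE_PREFIXES = ("/finance/", "/legal/", "/hr/")  # hypothetical sensitive locations

def build_baseline(history):
    """Map each user to the set of folders they accessed during the baseline period."""
    baseline = defaultdict(set)
    for user, path in history:
        baseline[user].add(str(PurePosixPath(path).parent))
    return baseline

def unusual_sensitive_access(baseline, events):
    """Return (user, path) events that touch sensitive folders new to that user."""
    alerts = []
    for user, path in events:
        folder = str(PurePosixPath(path).parent)
        if path.startswith(SENSITIVE_PREFIXES) and folder not in baseline.get(user, set()):
            alerts.append((user, path))
    return alerts

history = [("alice", "/finance/q1/report.xlsx"), ("bob", "/eng/design.md")]
events = [
    ("alice", "/finance/q1/budget.xlsx"),   # within alice's baseline
    ("bob", "/finance/q1/report.xlsx"),     # sensitive and new for bob
    ("bob", "/eng/notes.md"),               # not a sensitive location
]
print(unusual_sensitive_access(build_baseline(history), events))
```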

Refer to Appendix A for an example mapping of security tools against data protection program elements.

Appendix A: Example Data Protection Program Technology Mapping

Using experience from investigations involving data theft, Mandiant created a non-exhaustive map of data protection technologies to components of an Information Security program. The table can be used to identify potential gaps or overlaps in technology coverage and should be customized to an organization’s specific environment and risks.

| Technology Type | Technology Layer | Access Management | Backups | Classification | Data Discovery | Data Loss Prevention | Email Security | Encryption | Endpoint/MDM | Network Security | Security Log Monitoring |

| Data Sensitivity Alerting Tool | Data | ✓ | ✓ | ✓ | |||||||

| Mobile Device Management | Endpoint | ✓ | ✓ | ✓ | |||||||

| Attack Surface Management | Network | ✓ | |||||||||

| DNS | Network | ✓ | |||||||||

| EDR for Endpoints | Endpoint | ✓ | ✓ | ||||||||

| Password Vault | Data | ✓ | |||||||||

| Cloud Data Classification and Protection Tool | Cloud | ✓ | ✓ | ||||||||

| ACL Based Firewalls | Network | ✓ | |||||||||

| Email Security Solution | ✓ | ||||||||||

| Web Proxy | Network | ✓ | |||||||||

| Vendor Management Tool | Network | ✓ | |||||||||

| Backups | Data | ✓ | ✓ | ||||||||

| SIEM | Data | ✓ | |||||||||

| UEBA | Endpoint | ✓ | |||||||||

| File Integrity Monitoring | Data | ✓ | ✓ |
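The gap-and-overlap analysis this table supports can also be automated once an organization records which program components each deployed tool covers. In the sketch below, the component list mirrors the table's column headers, while the tool inventory is a hypothetical example rather than a reading of the table.

```python
from collections import Counter

COMPONENTS = [
    "Access Management", "Backups", "Classification", "Data Discovery",
    "Data Loss Prevention", "Email Security", "Encryption", "Endpoint/MDM",
    "Network Security", "Security Log Monitoring",
]

def coverage_report(inventory):
    """Return (gaps, overlaps) for a mapping of tool -> components it covers.

    gaps: components that no tool covers, in table order
    overlaps: components covered by more than one tool, with the tool count
    """
    counts = Counter(c for covered in inventory.values() for c in set(covered))
    gaps = [c for c in COMPONENTS if counts[c] == 0]
    overlaps = {c: n for c, n in counts.items() if n > 1}
    return gaps, overlaps

# Hypothetical inventory for illustration only.
inventory = {
    "SIEM": {"Security Log Monitoring"},
    "EDR for Endpoints": {"Endpoint/MDM", "Security Log Monitoring"},
    "Backups": {"Backups"},
    "Email Security Solution": {"Email Security", "Data Loss Prevention"},
}
gaps, overlaps = coverage_report(inventory)
print("Gaps:", gaps)
print("Overlaps:", overlaps)
```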

Appendix B: Additional Services and Resources

Additional Google Services

Google Cloud Dataplex is a fully managed data lake service that can be leveraged to organize, prepare, and analyze data in the GCP environment. Dataplex provides a central repository for data, along with tools to clean, transform, and integrate that data for analysis. Importantly for data protection, Dataplex includes tools to automate data discovery, manage access, track usage, and enforce policies. Dataplex can be leveraged to obtain the following capabilities:

- Unified Data Infrastructure

- Data Catalog and Discovery

- Intelligent Data Fabric

- Data Lineage and Compliance

The Google Cloud Data Catalog API can be leveraged as a data discovery and cataloging service. It enables developers to manage and discover metadata about data assets and provides programmatic access to create, update, and delete entries in the data catalog. The API allows users to search for and retrieve metadata about assets such as tables, views, and columns from various data sources. It also integrates with other Google Cloud services to support data discovery and governance workflows.

Mandiant Security Services

Mandiant can provide the following independent security assessments to gauge organizational cybersecurity maturity.

- Security Program Assessment

- Insider Threat Assessment

- Cloud Security Assessment

- Data Protection Program Assessment (a comprehensive assessment; contact Mandiant for information and pricing)

Additionally, Mandiant can perform rapid security reviews of the three major cloud environments to assess data security practices.

- GCP Review

    - Review of externally exposed resources (Storage buckets, Cloud SQL instances, Kubernetes clusters, BigQuery datasets, etc.)

    - Overly permissive IAM roles and policies

    - Review of firewall rules

    - Logging, monitoring, and alerting settings for GCP services

    - Overly permissive service accounts

    - Review of password and MFA policies

    - Finding secrets stored in GCP services (Cloud Functions, Cloud Run, etc.)

- Azure Review

    - MFA requirements and enforcement for accounts

    - Conditional Access Policies related to Azure access

    - Scope of accounts assigned privileged roles in Azure

    - Publicly exposed resources such as virtual machines, storage accounts, key vaults, and databases

    - Network security groups

    - Data protection configuration

    - Logging and threat detection settings

- AWS Review

    - Identity and Access Management

    - Logging and Monitoring

    - Data Protection

    - Infrastructure Security